Methodology update

One of the defining features of the EU referendum has been the disparity between online and telephone polls. As anybody following the campaign will be able to tell you, the answer to “what do the polls say?” is far from straightforward.

However, one illuminating attempt to explain the gap was a paper, mentioned in an earlier post on this site, by Matt Singh and James Kanagasooriam, which compared the results of some online and telephone polls.

ICM ran a similar comparison, conducting parallel telephone and online polls. The two modes produced different results despite using the same sample frame and weighting techniques, though a later effort showed this gap closing by itself.

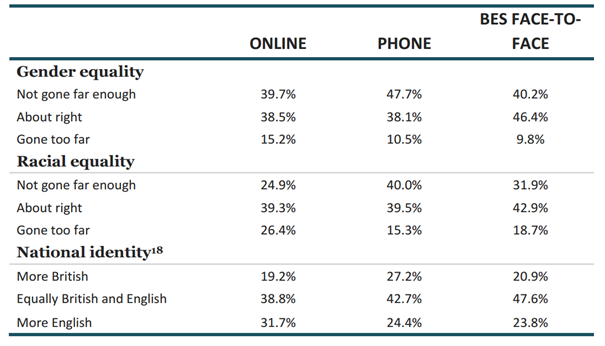

The crucial table in the Singh / Kanagasooriam paper was this one showing the answers to several questions about general social attitudes when asked via online survey, telephone survey and in the British Election Study face-to-face study, the last of which is the closest we have to a ‘gold standard’ in survey research.

The takeaway from this is that, despite virtually identical demographic and political sampling and weighting, telephone samples produced answers which were more socially liberal while online samples produced answers which were more socially conservative. Our internal research showed a similar set of results to the online results here and, sometimes, an even more extreme disparity vs. telephone polls.

The reason these differences matter is that, while they do not have a clear impact on which party people say they support (though naturally they will affect things below the surface, and UKIP’s level of support in particular), they do have a direct impact on whether a person thinks Britain should remain in or leave the European Union. We can end up with samples that have too many small-c conservatives even though we have the right number of Conservatives. This means that we may have the vote shares broadly correct for parties but can still be wrong on issues that cut across party lines, such as this referendum.

The Sturgis inquiry into the failures of the industry at the 2015 election pointed to the issue of unrepresentative samples and, as the ICM experiment shows, telephone and online polls are reaching two different audiences. In the absence of time or budget for a randomly sampled face-to-face survey, we must make do with what resources are available and turn to the last randomly sampled face-to-face survey: the British Election Study.

One of the questions asked when polls produce a clear modal difference, as is present here but was absent in 2015, is whether interviewer effect is playing a part in generating different results. This is why it is interesting that telephone samples produce more socially liberal results than the BES face-to-face survey. In theory, as the respondent moves from in-person contact with an interviewer, through the more distant medium of the telephone and finally to the impersonal environment of an online survey, interviewer effect should play less of a role and a respondent’s “true” feelings should be more accurately reflected.

However, that the most socially liberal answers come from telephone surveys suggests that the ‘true’ state of public opinion on these issues is closer to the BES result and that the differences are more influenced by sampling. The easiest people to reach online are a bit too socially conservative, the easiest ones to reach by telephone are a bit too socially liberal. Both types of poll have fast turnaround times of a few days whereas the BES can be conducted over several months. This means that online and telephone polls have to take whoever they can get (within an overall sample frame) while the BES can select precise respondents and make multiple attempts to contact them, resulting in a more representative overall sample at the cost of a significantly longer timespan.

Our referendum-specific solution

In theory, therefore, we could simply weight our samples to match the answers given to the relevant social attitudes questions from the BES. The BES is the gold standard: it is more representative than quick-turnaround online or telephone surveys, and its past-vote question (asking how people voted in 2015) came very close to the actual result when most pollsters’ re-contact studies (ours included) merely replicated their final pre-election polls and failed to recreate the Labour-Conservative gap.

However, weighting your sample based on attitudes is fraught with hazards, not least because we don’t have fixed, incontrovertible data points to weight to. We have an idea of what the answers should be but, even if we have 100% accurate data, attitudes can change over time.

The other difficulty is interviewer effect. It may not account for the totality of the difference between telephone polls, online polls and the face-to-face survey but it is likely to be a factor, particularly on sensitive issues such as racial equality, gender equality and national identity.

For these reasons, the weighting targets we use are based on a rolling average that includes the answers to the BES questions and those that our poll produces naturally after all other weighting (including party propensity) has been applied.

In essence, this ‘pulls’ our social attitude figures a little closer to the BES but, as our weighting targets are based in part on the figures our poll produces without such weighting, they remain sensitive to any underlying shifts in these attitudes and account for interviewer effects.
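As a rough illustration of how a blended target of this kind might work, the sketch below averages a BES figure with the figures from recent polls and then derives the weights needed to hit that target. The exact formula is not given here, so the equal-weight average, the function names `blended_target` and `attitude_weights`, and all of the numbers are assumptions for illustration only.

```python
def blended_target(bes_share, recent_poll_shares):
    """Average the BES figure with recent poll figures.

    Assumption: every figure counts equally. The real rolling
    average may weight the BES and the polls differently.
    """
    values = [bes_share] + list(recent_poll_shares)
    return sum(values) / len(values)


def attitude_weights(sample_share, target_share):
    """Weights that move the share agreeing with a statement
    from `sample_share` to `target_share`."""
    return {
        "agree": target_share / sample_share,
        "other": (1 - target_share) / (1 - sample_share),
    }


# Hypothetical figures: share agreeing with one social attitudes statement.
bes_share = 0.40             # face-to-face benchmark
poll_shares = [0.50, 0.48]   # our polls after all other weighting

target = blended_target(bes_share, poll_shares)
weights = attitude_weights(poll_shares[-1], target)

# Check: applying the weights to the latest poll reproduces the target.
weighted_share = poll_shares[-1] * weights["agree"]
print(round(target, 3), round(weighted_share, 3))
```

Because the poll's own figures feed into the target, a real shift in attitudes moves the target too, rather than being weighted away entirely.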

The net effect of this is to produce a small but significant movement towards Remain. Our published numbers therefore give the impression of stasis when in fact they come after a movement towards Leave, which is why our most recent results perhaps generated some confusion when published. Had we applied these changes to our last poll, the 4-point lead for Remain would have been a little larger.

The table below shows this:

| | 31st May poll (post changes) | 31st May poll (pre changes) | 17th May poll (pre changes) | 26th April poll (pre changes) |
|---|---|---|---|---|
| Remain | 43% | 40% | 44% | 42% |
| Leave | 41% | 43% | 40% | 41% |
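For readers who want to see the leads directly, the short sketch below computes the Remain lead for each column of the table. The figures are taken from the table; the dictionary layout and the function name `remain_lead` are just illustrative choices.

```python
# Headline figures from the table above, in percentage points.
polls = {
    "31st May (post changes)": {"Remain": 43, "Leave": 41},
    "31st May (pre changes)": {"Remain": 40, "Leave": 43},
    "17th May (pre changes)": {"Remain": 44, "Leave": 40},
    "26th April (pre changes)": {"Remain": 42, "Leave": 41},
}


def remain_lead(figures):
    """Remain share minus Leave share (negative means Leave is ahead)."""
    return figures["Remain"] - figures["Leave"]


leads = {name: remain_lead(figures) for name, figures in polls.items()}

for name, lead in leads.items():
    print(f"{name}: Remain lead {lead:+d}")

# Prints:
# 31st May (post changes): Remain lead +2
# 31st May (pre changes): Remain lead -3
# 17th May (pre changes): Remain lead +4
# 26th April (pre changes): Remain lead +1
```

On the 31st May data, the changes move the result from a 3-point Leave lead to a 2-point Remain lead.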

This is not an ideal solution to what is ultimately a sampling problem. Solving that problem will take more time and is a larger issue for the wider research industry than for this one segment of it. However, given the constraints of budget and time (or, more specifically, absent the hundreds of thousands of pounds and many months necessary to conduct a randomised face-to-face study), we believe it is the most accurate way of determining the state of public opinion for the EU referendum.

A few other notes:

There are also some pollster-specific effects. Martin Boon at ICM has mentioned the intriguing possibility that enthusiastic Brexiters respond to online survey invitations straight away and fill their relevant demographic quotas before more Remain-leaning participants can take part. This is certainly realistic but Opinium handles demographics at the sampling stage without specific quotas so, in theory, should be less affected by this unless that same effect takes place over the entire survey period. YouGov’s system of sending invitations to a general survey pool rather than to specific surveys should also negate this effect. Nevertheless, it is a good illustration of the “too engaged” problem that polls have, this time at the opposite end of the age spectrum from the one seen at the general election.

Age groups. The weighting data for the most recent poll shows the use of three age groups (18-34, 35-54, 55+) which is a change from our recent policy of weighting by five age groups. This was due to a technical issue and done only because on this occasion the changes made little difference to the overall numbers. Nevertheless, future referendum polls will split out the upper age groups, as has been our policy since the general election last year.

Education. Another suggested reason for the gap between telephone and online polls is that telephone polls interview too many university graduates, who tend to be significantly more pro-Remain. In many telephone polls the proportion of graduates is close to half, whereas the actual incidence in the UK population is closer to a third. While we make no comment on what other agencies do in this area, our experience is that our samples tend to reflect the population accurately, with graduates making up closer to a third of respondents. This information was not included in our most recent poll but may be published alongside future releases.
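To show why the proportion of graduates matters, the sketch below reweights a sample in which half of respondents are graduates so that it matches a population in which a third are. The half and one-third proportions come from the paragraph above; the Remain support figures for each group and the function name `post_strat_weights` are hypothetical and chosen only to make the arithmetic visible.

```python
def post_strat_weights(sample_shares, population_shares):
    """Weight for each group = population share / sample share."""
    return {g: population_shares[g] / sample_shares[g] for g in sample_shares}


# Proportions mentioned in the text: about half vs about a third.
sample = {"graduate": 0.5, "non_graduate": 0.5}
population = {"graduate": 1 / 3, "non_graduate": 2 / 3}

weights = post_strat_weights(sample, population)

# Hypothetical Remain support within each group (not real data).
remain_support = {"graduate": 0.60, "non_graduate": 0.40}

unweighted = sum(sample[g] * remain_support[g] for g in sample)
weighted = sum(sample[g] * weights[g] * remain_support[g] for g in sample)

print(f"Graduate weight: {weights['graduate']:.2f}")
print(f"Non-graduate weight: {weights['non_graduate']:.2f}")
print(f"Remain before: {unweighted:.1%}, after: {weighted:.1%}")
```

With these illustrative inputs, correcting the graduate share lowers the Remain figure by a few points, which is the direction of the effect described above.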